Least Recently Used (LRU)

Discards the least recently used items first. This algorithm requires keeping track of what was used when, which is expensive if one wants to make sure the algorithm always discards the least recently used item. General implementations of this technique require keeping "age bits" for cache-lines and track the "Least Recently Used" cache-line based on age-bits. In such an implementation, every time a cache-line is used, the age of all other cache-lines changes.

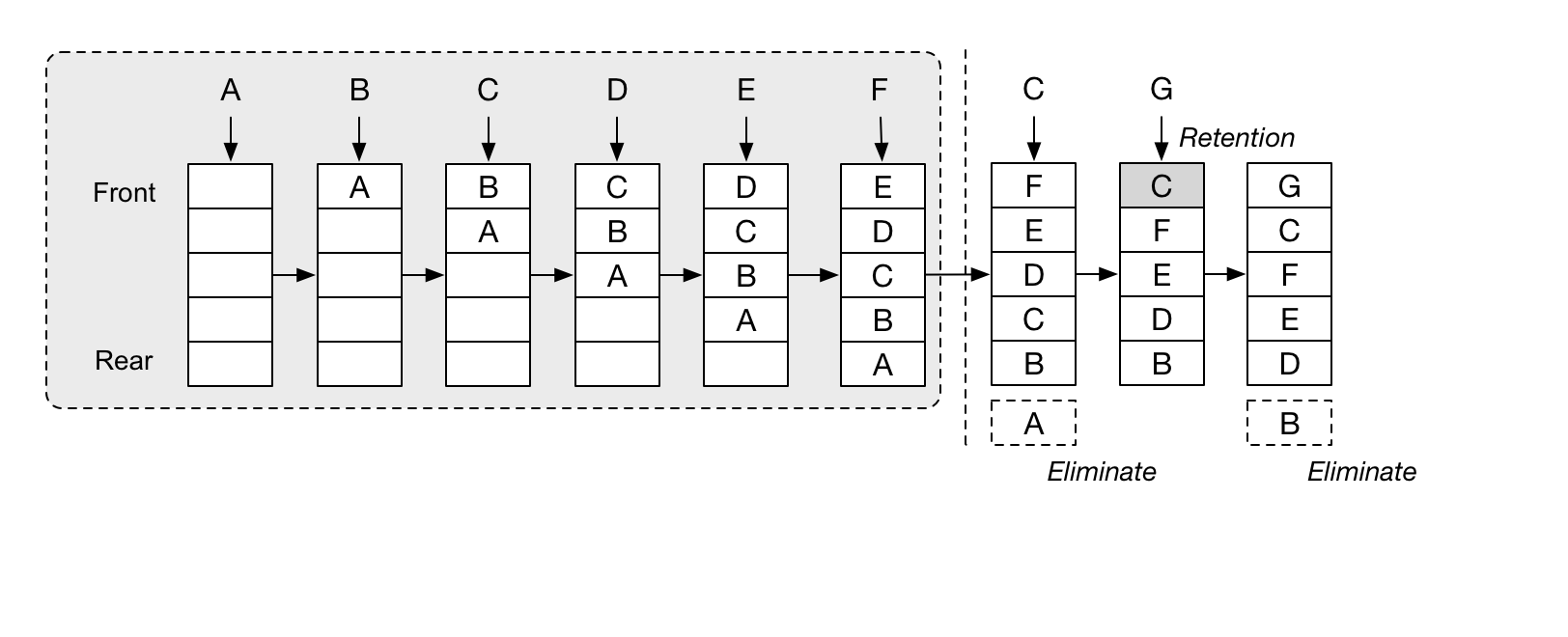

LRU Cache Elimination Process

Golang Implement: github.com/golang/groupcache/blob/master/lru/lru.go

Least-Frequently Used (LFU)

Least Frequently Used (LFU) is a type of cache algorithm used to manage memory within a computer. The standard characteristics of this method involve the system keeping track of the number of times a block is referenced in memory. When the cache is full and requires more room the system will purge the item with the lowest reference frequency.

The simplest method to employ an LFU algorithm is to assign a counter to every block that is loaded into the cache. Each time a reference is made to that block the counter is increased by one. When the cache reaches capacity and has a new block waiting to be inserted the system will search for the block with the lowest counter and remove it from the cache.

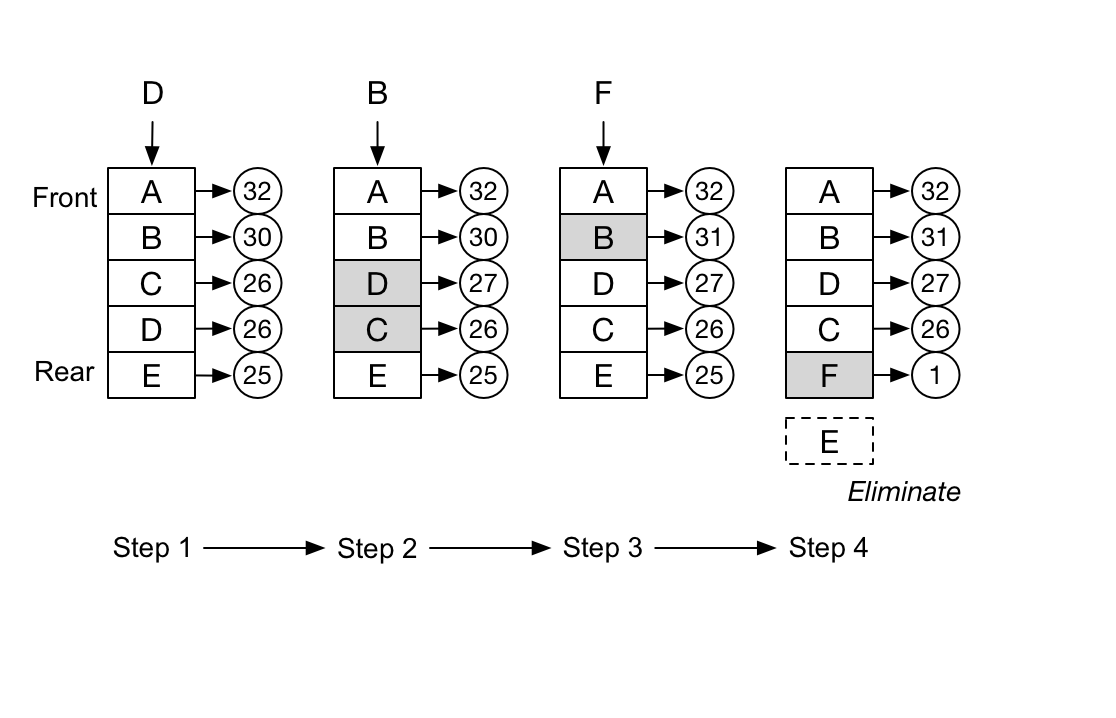

LFU Cache Elimination Process

Golang Implement: github.com/bluele/gcache/blob/master/lfu.go

The difference between LRU and LFU

For example, if cache size is 3, the data access sequence as:

set(2,2), set(1,1), get(2), get(1), get(2), set(3,3), set(4,4)

When set(4,4) the LFU algorithm will eliminated (3,3), LRU will eliminated (1,1).