Memcached, system of the distributed memory caching, is often used to increase the performance and availability of the hosted application through decreasing database load. It creates a common cache for all the application nodes and represents your application short-term memory.

Performance

Most of Memcache functionality (add, get, set, flush etc) are  . This means they are constant time functions. It does not matter how many items there are inside the cache, the functions will take just as long as they would with just 1 item inside the cache. Memcache uses the LRU algorithm to eliminate data for the slab.

. This means they are constant time functions. It does not matter how many items there are inside the cache, the functions will take just as long as they would with just 1 item inside the cache. Memcache uses the LRU algorithm to eliminate data for the slab.

Internally, all objects have a "counter". This counter holds a timestamp. Every time a new object is created, that counter will be set to the current time. When an object gets FETCHED, it will reset that counter to the current time as well. As soon as Memcache needs to "evict" an object to make room for newer objects, it will find the lowest counter. That is the object that isn't fetched or is fetched the longest time ago (and probably isn't needed that much, otherwise the counter would be closed to the current timestamp).

In effect, this creates a simple system that uses the cache very efficient. If it isn't used, it's kicked out of the system.

Memory Allocation

Memcached system uses the slab instead of the item-by-item memory allocation. As a result, it improves the usage of the memory and protects it from the fragmentation in case the data expires from the cache.

Each slab consists of several 1 MB size pages and each page, in its turn, consists of the equal amount of blocks or chunks. After every data storing the Memcached defines the data size and looks for a suitable allocation in all slabs. If such allocation exists, the data is written to it. If there is no suitable allocation, the Memcached creates a new slab and divides it into the blocks of the necessary size. In the case you update the already stored item and its new value exceeds the size of the block allocation, it was stored in before, Memcached moves it to another, suitable slab.

+---------------------------------------+

| Page |

+-------+-------+-------+-------+-------+ < Slab Class #1

| Chunk | Chunk | Chunk | Chunk | Chunk |

+-------+-------+-------+-------+-------+

+---------------------------------------+

| Page |

+-------+-------+-------+-------+-------+ < Slab Class #1

| Chunk | Chunk | Chunk | Chunk | Chunk |

+-------+-------+-------+-------+-------+

+---------------------------------------+

| Page |

+-------+-------+-------+-------+-------+ < Slab Class #2

| Chunk | Chunk | Chunk | Chunk | Chunk |

+-------+-------+-------+-------+-------+

+---------------------------------------+

| Page |

+-------+-------+-------+-------+-------+ < Slab Class #1

| Chunk | Chunk | Chunk | Chunk | Chunk |

+-------+-------+-------+-------+-------+

As a result, every instance has multiple pages distributed and allocated within the Memcached memory. This method of allocation prevents the memory fragmentation. But on the other hand it can cause the waste of memory if you have not enough amount of items with equal allocation size, i.e. there are only a few filled chunks on every page. Thus one more important point is the distribution of the stored items.

Reference slabs.c

typedef struct {

unsigned int size; /* sizes of items */

unsigned int perslab; /* how many items per slab */

void *slots; /* list of item ptrs */

unsigned int sl_curr; /* total free items in list */

unsigned int slabs; /* how many slabs were allocated for this class */

void **slab_list; /* array of slab pointers */

unsigned int list_size; /* size of prev array */

size_t requested; /* The number of requested bytes */

} slabclass_t;

Slab Allocation

When Memcache starts, it partitions its allocated memory into smaller parts called pages. Each page is 1MB large (coincidentally, the maximum size that an object can have you can store in Memcache). Each of those pages can be assigned to a slab-class or can be unassigned (being a free page). A slab-class decides how large the objects can be that are stored inside that particular page. Each page that is designated to a particular slab-class will be divided into smaller parts called chunks. The chunks in each slab have the same size so there cannot be 2 different sized chunks on the same page. For instance, there could be a page with 64byte chunks (slab class 1), a page with 128byte chunks (slab class 2) and so on, until we get the largest slab with only 1 chunk (the 1MB chunk). There can be multiple pages for each slab-class, but as soon as a page is assigned a slab-class (and thus, split up into chunks), it cannot be changed to another slab-class.

The smallest chunk-size starts at 80 bytes and increases with a factor of 1.25 (rounded up until the next power of 2). So the second smallest chunk size would be 100 etc. You can actually find it out by issuing the "-vv" flag when starting memcache. You can also set the factor (-f) and the initial chunk-size (-s), but unless you really know what you are doing, don't change the initial values.

$ memcached -vv slab class 1: chunk size 96 perslab 10922 slab class 2: chunk size 120 perslab 8738 slab class 3: chunk size 152 perslab 6898 slab class 4: chunk size 192 perslab 5461 slab class 5: chunk size 240 perslab 4369 slab class 6: chunk size 304 perslab 3449 slab class 7: chunk size 384 perslab 2730 slab class 8: chunk size 480 perslab 2184 slab class 9: chunk size 600 perslab 1747

Reference slabs.c

static void *do_slabs_alloc(const size_t size, unsigned int id, uint64_t *total_bytes,

unsigned int flags) {

slabclass_t *p;

void *ret = NULL;

item *it = NULL;

if (id < POWER_SMALLEST || id > power_largest) {

MEMCACHED_SLABS_ALLOCATE_FAILED(size, 0);

return NULL;

}

p = &slabclass[id];

assert(p->sl_curr == 0 || ((item *)p->slots)->slabs_clsid == 0);

if (total_bytes != NULL) {

*total_bytes = p->requested;

}

assert(size <= p->size);

/* fail unless we have space at the end of a recently allocated page,

we have something on our freelist, or we could allocate a new page */

if (p->sl_curr == 0 && flags != SLABS_ALLOC_NO_NEWPAGE) {

do_slabs_newslab(id);

}

if (p->sl_curr != 0) {

/* return off our freelist */

it = (item *)p->slots;

p->slots = it->next;

if (it->next) it->next->prev = 0;

/* Kill flag and initialize refcount here for lock safety in slab

* mover's freeness detection. */

it->it_flags &= ~ITEM_SLABBED;

it->refcount = 1;

p->sl_curr--;

ret = (void *)it;

} else {

ret = NULL;

}

if (ret) {

p->requested += size;

MEMCACHED_SLABS_ALLOCATE(size, id, p->size, ret);

} else {

MEMCACHED_SLABS_ALLOCATE_FAILED(size, id);

}

return ret;

}

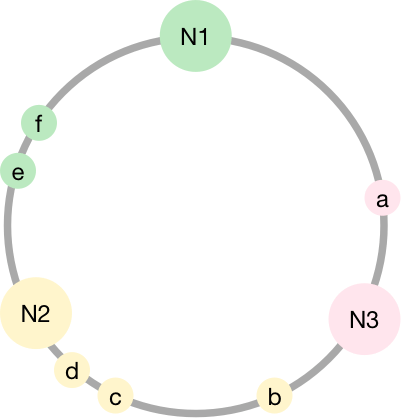

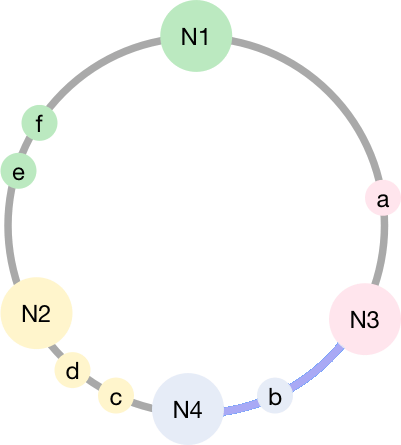

Consistent Hashing

- Remainder Hash

What memcache normally does is a simple, yet very effective load balance trick: for each key that gets stored or fetched, it will create a hash (you might see it as md5(key), but in fact, it's a more specialized - quicker - hash method). Now, the hashes we create are pretty much evenly distributed, so we can use a modulus function to find out which server to store the object to:

node_id = hash_key % len(nodes)

The trouble with this system: as soon as node_id (the number of servers) change, almost 100% of all keys will change server as well. Maybe some keys will get the same server id, but that would be a coincidence. In effect, when you change your memcache server count (either up or down, doesn't matter), you get a big stampede on your backend system since all keys are all invalidated at once.

- Consistent Hashing

Consistent hashing use a counter that acts like a clock. Hash values ![[0, 2^{32}-1]](https://xuri.me/wp-content/plugins/latex/cache/tex_3c2afd25c10796ecffdede257ed96342.gif) are distributed over the circle so that objects are instead assigned to the cache server that is closest in the clockwise direction.

are distributed over the circle so that objects are instead assigned to the cache server that is closest in the clockwise direction.

All nodes get a relatively equal number of keys, be able to add and remove nodes such as the fewest number of keys are moved around.

Memcached Statistics Commands

| Field | Sample value | Description |

| accepting_conns | 1 | The Memcached server is currently accepting new connections. |

| auth_cmds | 0 | Number of authentication commands processed by the server - if you use authentication within your installation. The default is IP (routing) level security which speeds up the actual Memcached usage by removing the authentication requirement. |

| auth_errors | 0 | Number of failed authentication tries of clients. |

| bytes | 6775829 | Number of bytes currently used for caching items, this server currently uses ~6 MB of it's maximum allowed (limit_maxbytes) 1 GB cache size. |

| bytes_read | 880545081 | Total number of bytes received from the network by this server. |

| bytes_written | 3607442137 | Total number of bytes send to the network by this server. |

| cas_badval | 0 | The cas command is some kind of Memcached's way to avoid locking. cas calls with bad identifier are counted in this stats key. |

| cas_hits | 0 | Number of successful cas commands. |

| cas_misses | 0 | cas calls fail if the value has been changed since it was requested from the cache. We're currently not using cas at all, so all three cas values are zero. |

| cmd_flush | 0 | The flush_all command clears the whole cache and shouldn't be used during normal operation. |

| cmd_get | 1626823 | Number of get commands received since server startup not counting if they were successful or not. |

| cmd_set | 2279784 | Number of set commands serviced since startup. |

| connection_structures | 42 | Number of internal connection handles currently held by the server. May be used as some kind of "maximum parallel connection count" but the server may destroy connection structures (don't know if he ever does) or prepare some without having actual connections for them (also don't know if he does). 42 maximum connections and 34 current connections (curr_connections) sounds reasonable, the live servers also have about 10% more connection_structures than curr_connections. |

| conn_yields | 1 | Memcached has a configurable maximum number of requests per event (-R command line argument), this counter shows the number of times any client hit this limit. |

| curr_connections | 34 | Number of open connections to this Memcached server, should be the same value on all servers during normal operation. This is something like the count of MySQL's SHOW PROCESSLIST result rows. |

| curr_items | 30345 | Number of items currently in this server's cache. The production system of this development environment holds more than 8 million items. |

| decr_hits | 0 | The decr command decreases a stored (integer) value by 1. A hit is a decr call to an existing key. |

| decr_misses | 0 | "decr" command calls to undefined keys. |

| delete_hits | 138707 | Stored keys may be deleted using the delete command, this system doesn't delete cached data itself, but it's using the Memcached to avoid recaching-races and the race keys are deleted once the race is over and fresh content has been cached. |

| delete_misses | 107095 | Number of delete commands for keys not existing within the cache. These 107k failed deletes are deletions of non existent race keys (see above). |

| evictions | 0 | Number of objects removed from the cache to free up memory for new items because Memcached reached it's maximum memory setting (limit_maxbytes). |

| get_hits | 391283 | Number of successful get commands (cache hits) since startup, divide them by the cmd_get value to get the cache hitrate: This server was able to serve 24% of it's get requests from the cache, the live servers of this installation usually have more than 98% hits. |

| get_misses | 1235540 | Number of failed get requests because nothing was cached for this key or the cached value was too old. |

| incr_hits | 0 | Number of successful incr commands processed. incr is a replace adding 1 to the stored value and failing if no value is stored. This specific installation (currently) doesn't use incr/decr commands, so all their values are zero. |

| incr_misses | 0 | Number of failed incr commands (see incr_hits). |

| limit_maxbytes | 1073741824 | Maximum configured cache size (set on the command line while starting the memcached server), look at the "bytes" value for the actual usage. |

| listen_disabled_num | 0 | Number of denied connection attempts because memcached reached it's configured connection limit ("-c" command line argument). |

| pid | 24040 | Current process ID of the Memcached task. |

| pointer_size | 64 | Number of bits of the hostsystem, may show "32" instead of "64" if the running Memcached binary was compiled for 32 bit environments and is running on a 64 bit system. |

| reclaimed | 14740 | Numer of times a write command to the cached used memory from another expired key. These are not storage operations deleting old items due to a full cache. |

| rusage_system | 310.030000 | Number of system time seconds for this server process. |

| rusage_user | 103.230000 | Numer of user time seconds for this server process. |

| threads | 4 | Number of threads used by the current Memcached server process. |

| time | 1323008181 | Current unix timestamp of the Memcached's server. |

| total_connections | 27384 | Numer of successful connect attempts to this server since it has been started. Roughly $number_of_connections_per_task * $number_of_webserver_tasks * $number_of_webserver_restarts. |

| total_items | 323615 | Numer of items stored ever stored on this server. This is no "maximum item count" value but a counted increased by every new item stored in the cache. |

| uptime | 1145873 | Numer of seconds the Memcached server has been running since last restart. 1145873 / (60 * 60 * 24) = ~13 days since this server has been restarted |

| version | 1.5.2 | Version number of the server |