For installing the system and creating the RAID array, please refer to Install Software RAID10 on Ubuntu 12.04 LTS Server.

Part 1 Remove device from RAID

mdadm does not allow you to remove a device that is still in use; to remove it, you must first mark it as failed. The same applies when a RAID device has actually failed: it must be marked as failed before it can be removed.

1 Remove a single RAID physical volume

Suppose partition sda1 has a problem and we want to remove it.

```
$ sudo mdadm /dev/md0 --fail /dev/sda1 --remove /dev/sda1
mdadm: set /dev/sda1 faulty in /dev/md0
mdadm: hot removed /dev/sda1 from /dev/md0
```

If you intend to reuse the removed device for other purposes, you must clear its RAID superblock; otherwise the system will still recognize the device as part of a RAID array.


```
$ sudo mdadm --zero-superblock /dev/sda1
```
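Zeroing the superblock of a partition that is still an active member is exactly the mistake the fail-then-remove rule is meant to prevent. The following is a minimal bash sketch, assuming a hypothetical `safe_zero_superblock` helper and a `DRY_RUN` variable (neither is part of mdadm): it refuses to continue if the partition still appears in /proc/mdstat and, by default, only prints the mdadm command.

```bash
#!/usr/bin/env bash
# Sketch only: safe_zero_superblock and DRY_RUN are hypothetical names,
# not mdadm features. DRY_RUN defaults to 1, which only prints commands.

run() {
  if [ "${DRY_RUN:-1}" = 0 ]; then "$@"; else echo "$*"; fi
}

safe_zero_superblock() {
  local dev=$1 mdstat=${2:-/proc/mdstat}
  local name=${dev#/dev/}                 # /dev/sda1 -> sda1
  [ -r "$mdstat" ] || { echo "cannot read $mdstat" >&2; return 1; }
  # Members are listed in mdstat as e.g. "sda1[0]" or "sda1[0](F)".
  if grep -Eq "(^|[[:space:]])${name}\[" "$mdstat"; then
    echo "refusing: $name is still listed in $mdstat" >&2
    return 1
  fi
  run mdadm --zero-superblock "/dev/$name"
}
```

From a root shell, `DRY_RUN=0 safe_zero_superblock /dev/sda1` would perform the real operation once sda1 has been removed from md0.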

2 Remove the entire hard drive

To remove an entire hard drive, you must first remove every RAID member partition on that drive.

For example, to remove the first hard disk, sda, completely, mark sda1, sda2 and sda3 as failed, remove them from their arrays, and then remove the whole drive.

```
$ sudo mdadm /dev/md0 --fail /dev/sda1 --remove /dev/sda1
mdadm: set /dev/sda1 faulty in /dev/md0
mdadm: hot removed /dev/sda1 from /dev/md0
$ sudo mdadm /dev/md1 --fail /dev/sda2 --remove /dev/sda2
mdadm: set /dev/sda2 faulty in /dev/md1
mdadm: hot removed /dev/sda2 from /dev/md1
$ sudo mdadm /dev/md2 --fail /dev/sda3 --remove /dev/sda3
mdadm: set /dev/sda3 faulty in /dev/md2
mdadm: hot removed /dev/sda3 from /dev/md2
```
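The three commands follow one pattern: partition N of the disk belongs to md(N-1). A minimal bash sketch of that loop, assuming this article's layout (1 to md0, 2 to md1, 3 to md2) and a hypothetical `remove_disk` helper whose `DRY_RUN` mode (on by default) only prints the mdadm commands:

```bash
#!/usr/bin/env bash
# Sketch only: assumes partition 1 -> md0, 2 -> md1, 3 -> md2.
# remove_disk and DRY_RUN are hypothetical; DRY_RUN defaults to 1.

run() {
  if [ "${DRY_RUN:-1}" = 0 ]; then "$@"; else echo "$*"; fi
}

remove_disk() {
  local disk=$1 part
  for part in 1 2 3; do
    # Fail first, then remove: mdadm will not remove an active member.
    run mdadm "/dev/md$((part - 1))" --fail "/dev/${disk}${part}" \
              --remove "/dev/${disk}${part}" || return 1
  done
}
```

Running `remove_disk sda` prints the three commands above; `DRY_RUN=0 remove_disk sda` from a root shell would execute them and stop at the first failure.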

Now, if the server supports hot swapping, you can pull this hard disk out.

Part 2 Add existing RAID physical volume

To add back RAID members that already exist, such as sda1, sda2 and sda3 that we have just removed, use `--add`:

```
$ sudo mdadm /dev/md0 --add /dev/sda1
mdadm: added /dev/sda1
$ sudo mdadm /dev/md1 --add /dev/sda2
mdadm: added /dev/sda2
$ sudo mdadm /dev/md2 --add /dev/sda3
mdadm: added /dev/sda3
```
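Adding members back uses the same partition-to-array pattern. A minimal bash sketch, again assuming this article's layout and a hypothetical `add_disk` helper with a `DRY_RUN` mode that is on by default:

```bash
#!/usr/bin/env bash
# Sketch only: assumes partition 1 -> md0, 2 -> md1, 3 -> md2.
# add_disk and DRY_RUN are hypothetical; DRY_RUN defaults to 1.

run() {
  if [ "${DRY_RUN:-1}" = 0 ]; then "$@"; else echo "$*"; fi
}

add_disk() {
  local disk=$1 part
  for part in 1 2 3; do
    run mdadm "/dev/md$((part - 1))" --add "/dev/${disk}${part}" || return 1
  done
}
```

The same helper fits the new disk later in Part 3 (`add_disk sdd`), but only after its partitions have been created.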

Part 3 Replace a hard drive with a new one

1 Remove the bad hard drive

```
$ sudo mdadm /dev/md0 --fail /dev/sda1 --remove /dev/sda1
$ sudo mdadm /dev/md1 --fail /dev/sda2 --remove /dev/sda2
$ sudo mdadm /dev/md2 --fail /dev/sda3 --remove /dev/sda3
```

Removed, check RAID status

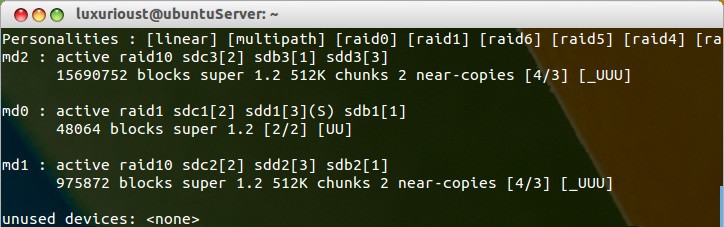

cat /proc/mdstat
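In /proc/mdstat, the status field of each array, such as `[UUUU]`, shows one `U` per active member and `_` for a missing or failed one. A minimal bash sketch that picks out degraded arrays, assuming a hypothetical `degraded_arrays` helper and the usual layout where the status field appears on the line after the array's header:

```bash
#!/usr/bin/env bash
# Sketch only: degraded_arrays is a hypothetical helper, not an mdadm command.
# Prints "<array> <status>" for every array whose status contains "_".

degraded_arrays() {
  local mdstat=${1:-/proc/mdstat}
  awk '
    # Header line of an array, e.g. "md2 : active raid10 sdb3[1] ..."
    /^md[0-9]+ :/ { array = $1; next }
    # Following lines: look for the status field, e.g. [UUUU] or [_UUU].
    array != "" {
      for (i = 1; i <= NF; i++) {
        if ($i ~ /^\[[U_]+]$/) {
          if (index($i, "_")) print array, $i
          array = ""
          break
        }
      }
    }
  ' "$mdstat"
}
```

After removing all three sda partitions, `degraded_arrays` should list md0, md1 and md2.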

Now, if the server supports hot swapping, you can pull this hard disk out.

2 Insert the new hard drive

Although the first disk has been removed from the RAID, the system can still boot, because sdb has become the first hard disk and grub's hd0 now actually refers to sdb.

If you do this on a real server that supports hot swapping, no restart is needed: simply pull out the old hard disk and insert the new one.

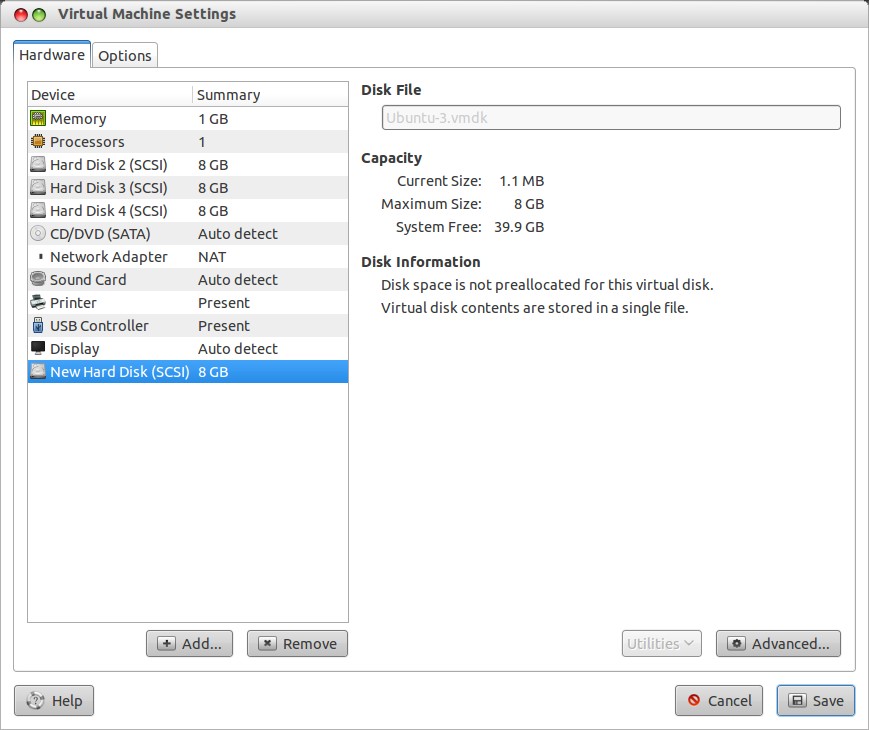

Because I did this experiment in VMware, and my setup does not support hot swapping, the machine had to be shut down before adding the new hard drive.

```
$ sudo poweroff
```

After shutting down, first add the new disk in VMware, and only then delete the original (bad) first hard drive. If you remove the old drive first and then add the new one, VMware assigns the new drive to SCSI0:0, which Linux sees as sda; since the new drive has no grub installed, the system will fail to boot.

After adding the new hard disk, boot the machine and, once the system is up, check the current disks:

```
$ sudo fdisk -l

Disk /dev/sda: 8589 MB, 8589934592 bytes
255 heads, 63 sectors/track, 1044 cylinders, total 16777216 sectors
Units = sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disk identifier: 0x000a0d0e

   Device Boot      Start         End      Blocks   Id  System
/dev/sda1   *        2048       98303       48128   fd  Linux raid autodetect
/dev/sda2           98304     1075199      488448   fd  Linux raid autodetect
/dev/sda3         1075200    16775167     7849984   fd  Linux raid autodetect

Disk /dev/sdb: 8589 MB, 8589934592 bytes
255 heads, 63 sectors/track, 1044 cylinders, total 16777216 sectors
Units = sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disk identifier: 0x000ef169

   Device Boot      Start         End      Blocks   Id  System
/dev/sdb1            2048       98303       48128   fd  Linux raid autodetect
/dev/sdb2           98304     1075199      488448   fd  Linux raid autodetect
/dev/sdb3         1075200    16775167     7849984   fd  Linux raid autodetect

Disk /dev/sdd: 8589 MB, 8589934592 bytes
255 heads, 63 sectors/track, 1044 cylinders, total 16777216 sectors
Units = sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disk identifier: 0x00000000

Disk /dev/sdd doesn't contain a valid partition table

Disk /dev/sdc: 8589 MB, 8589934592 bytes
255 heads, 63 sectors/track, 1044 cylinders, total 16777216 sectors
Units = sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disk identifier: 0x0002acaf

   Device Boot      Start         End      Blocks   Id  System
/dev/sdc1            2048       98303       48128   fd  Linux raid autodetect
/dev/sdc2           98304     1075199      488448   fd  Linux raid autodetect
/dev/sdc3         1075200    16775167     7849984   fd  Linux raid autodetect

Disk /dev/md1: 999 MB, 999292928 bytes
2 heads, 4 sectors/track, 243968 cylinders, total 1951744 sectors
Units = sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 524288 bytes / 1048576 bytes
Disk identifier: 0x00000000

Disk /dev/md1 doesn't contain a valid partition table

Disk /dev/md0: 49 MB, 49217536 bytes
2 heads, 4 sectors/track, 12016 cylinders, total 96128 sectors
Units = sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disk identifier: 0x00000000

Disk /dev/md0 doesn't contain a valid partition table

Disk /dev/md2: 16.1 GB, 16067330048 bytes
2 heads, 4 sectors/track, 3922688 cylinders, total 31381504 sectors
Units = sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 524288 bytes / 1048576 bytes
Disk identifier: 0x00000000

Disk /dev/md2 doesn't contain a valid partition table
```

You should see that each of the three original hard drives has moved up one position (sdb has become sda, and so on), and that the new hard drive has become sdd, which has no partition table yet.
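Because the device names shift, it is easy to pick the wrong disk in the next step. One way to spot the new blank disk is to look in /proc/partitions for a whole disk that has no partitions of its own. A minimal bash sketch, assuming only sdX-style disk names and a hypothetical `unpartitioned_disks` helper:

```bash
#!/usr/bin/env bash
# Sketch only: unpartitioned_disks is a hypothetical helper.
# Assumes disks are named sd<letters> and partitions sd<letters><digits>.

unpartitioned_disks() {
  local partitions=${1:-/proc/partitions}
  awk '
    $4 ~ /^sd[a-z]+$/       { order[++n] = $4 }                # whole disk
    $4 ~ /^sd[a-z]+[0-9]+$/ { p = $4; sub(/[0-9]+$/, "", p); haspart[p] = 1 }
    END { for (i = 1; i <= n; i++) if (!(order[i] in haspart)) print order[i] }
  ' "$partitions"
}
```

On the system above, this would be expected to print only `sdd`.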

3 Partition the new hard disk

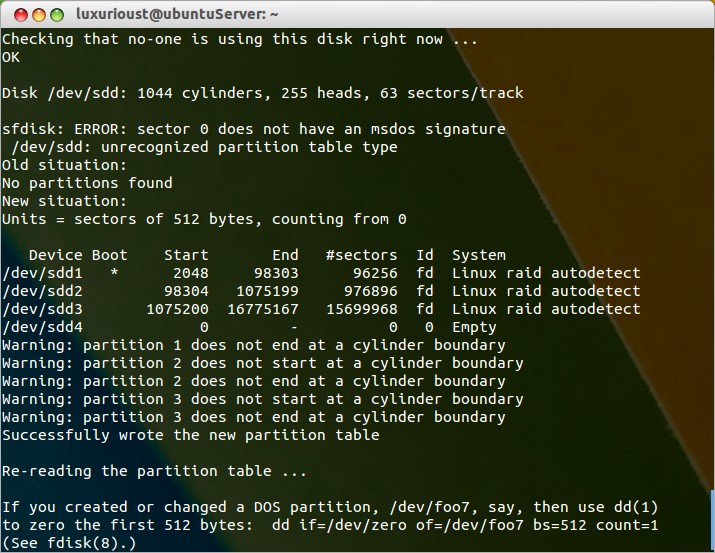

Copy the partition table of an existing hard drive to the new hard drive:

```
$ sudo sfdisk -d /dev/sda | sudo sfdisk /dev/sdd
```
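This command overwrites the target's partition table, so swapping the two arguments would wipe a working RAID disk. A minimal bash sketch of a guard, assuming a hypothetical `clone_partition_table` helper with a `DRY_RUN` mode (on by default): it refuses to write to a disk that already has partitions according to /proc/partitions.

```bash
#!/usr/bin/env bash
# Sketch only: clone_partition_table and DRY_RUN are hypothetical names.

clone_partition_table() {
  local src=$1 dst=$2 parts=${3:-/proc/partitions}
  if [ -z "$src" ] || [ -z "$dst" ]; then
    echo "usage: clone_partition_table SRC DST [PARTITIONS_FILE]" >&2
    return 1
  fi
  [ -r "$parts" ] || { echo "cannot read $parts" >&2; return 1; }
  # Refuse if the target already has partitions (e.g. arguments swapped).
  if awk -v d="${dst#/dev/}" '$4 ~ ("^" d "[0-9]+$") { found = 1 }
                              END { exit !found }' "$parts"; then
    echo "refusing: $dst already has partitions" >&2
    return 1
  fi
  if [ "${DRY_RUN:-1}" = 0 ]; then
    sfdisk -d "$src" | sfdisk "$dst"
  else
    echo "sfdisk -d $src | sfdisk $dst"
  fi
}
```

From a root shell, `DRY_RUN=0 clone_partition_table /dev/sda /dev/sdd` would run the same pipeline as above.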

4 Adding a new RAID partition

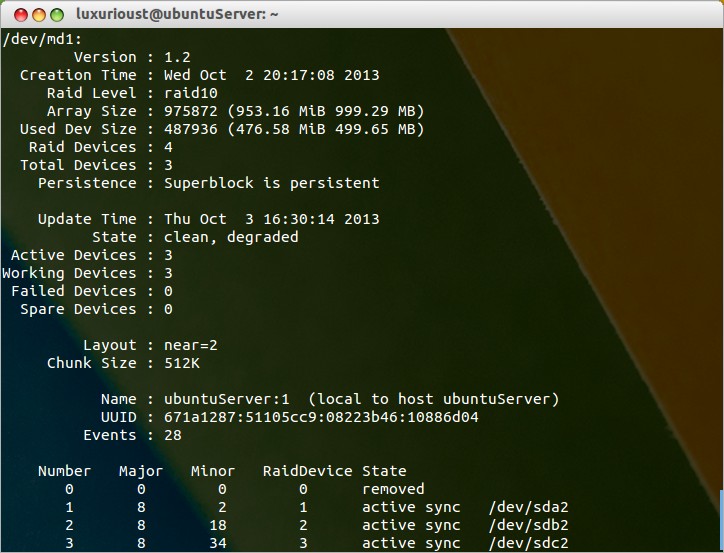

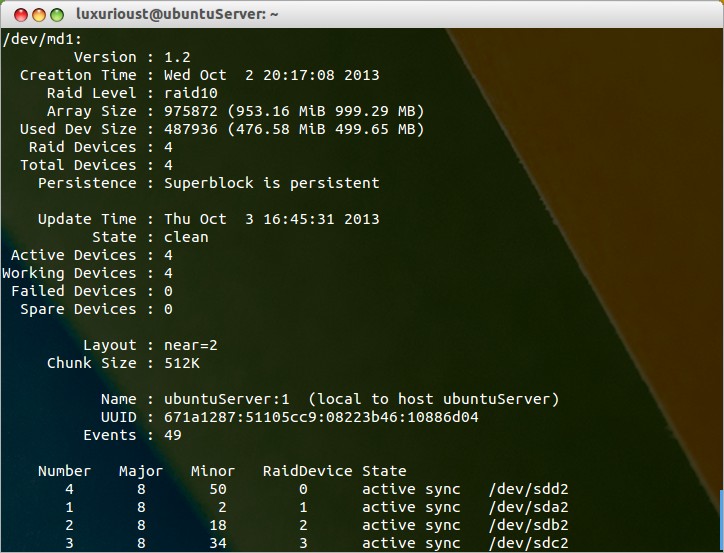

Before adding the new RAID partition, check the details of md1:

```
$ sudo mdadm --detail /dev/md1
```

Add sdd2 to md1:

```
$ sudo mdadm /dev/md1 --add /dev/sdd2
```

After this command, mdadm rebuilds md1. How long the rebuild takes varies with the size of the partition; when it has finished, md1 should be back in a clean state with all members active.
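While a rebuild is running, /proc/mdstat shows a progress line such as `recovery = 17.3% ...` inside that array's block. A minimal bash sketch that tests for this, assuming a hypothetical `is_rebuilding` helper and that array blocks in mdstat are separated by blank lines:

```bash
#!/usr/bin/env bash
# Sketch only: is_rebuilding is a hypothetical helper.
# Exit status 0 if the named array shows recovery/resync/reshape progress
# (a "=DELAYED" entry also counts), 1 otherwise.

is_rebuilding() {
  local array=$1 mdstat=${2:-/proc/mdstat}
  awk -v a="$array" '
    /^md[0-9]+ :/ { cur = $1; next }    # start of an array block
    /^[ \t]*$/    { cur = ""; next }    # blank line ends the block
    cur == a && /(recovery|resync|reshape)[ \t]*=/ { found = 1 }
    END { exit !found }
  ' "$mdstat"
}
```

For example, `while is_rebuilding md1; do sleep 30; done` waits until md1 has finished rebuilding before you continue.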

Then add the remaining partitions to rebuild md0 and md2:

```
$ sudo mdadm /dev/md0 --add /dev/sdd1
$ sudo mdadm /dev/md2 --add /dev/sdd3
```

5 Set up grub

Finally, you need to set up grub on the new disk; otherwise the system will not boot.

```
$ sudo apt-get install grub
$ sudo grub
grub> root (hd3,0)
grub> setup (hd3)
grub> quit
```
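The interactive grub session can also be scripted, since GRUB legacy's `grub --batch` reads its commands from standard input. A minimal bash sketch, assuming a hypothetical `grub_legacy_commands` helper that takes the grub drive index (3 for the fourth disk, as above) and that the boot files are on that drive's first partition:

```bash
#!/usr/bin/env bash
# Sketch only: grub_legacy_commands is a hypothetical helper.
# Prints the GRUB legacy commands used above for drive index N.

grub_legacy_commands() {
  local hd=$1
  case $hd in
    ''|*[!0-9]*) echo "usage: grub_legacy_commands DRIVE_INDEX" >&2; return 1 ;;
  esac
  # (hdN,0) is the first partition of drive N (the md0 member here).
  printf 'root (hd%s,0)\nsetup (hd%s)\nquit\n' "$hd" "$hd"
}
```

Usage: `grub_legacy_commands 3 | sudo grub --batch`. On a system that stays on GRUB 2, `sudo grub-install /dev/sdd` is the usual way to put the boot loader on the new disk instead.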

If you do this on a real server, the new hard drive is sda, so grub should be installed on hd0.

The new hard drive has now been added to the RAID, replacing the original faulty drive.