As we know, there are many crawlers and spiders, such as Googlebot and Baiduspider. However, some crawlers do not comply with the rules in robots.txt, and they put extra load on the server. So we should take steps to block these bad spiders. In this post I will show you how to block bad bots and crawlers with Apache or Nginx, and with PHP.

Apache

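Edit the site's configuration file as shown below, then save it and reload the Apache service. SetEnvIfNoCase sets the BADBOT environment variable when the User-Agent header matches one of the listed names (ignoring case) or is empty. `Deny from env=BADBOT` then rejects those requests with 403 Forbidden. Order, Allow and Deny are Apache 2.2 directives; on Apache 2.4 they only work when mod_access_compat is loaded.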

```apache
<Directory "/var/www">
    # Anti crawlers
    SetEnvIfNoCase User-Agent ".*(^$|FeedDemon|JikeSpider|Indy Library|Alexa Toolbar|AskTbFXTV|AhrefsBot|CrawlDaddy|CoolpadWebkit|Java|Feedly|UniversalFeedParser|ApacheBench|Microsoft URL Control|Swiftbot|ZmEu|oBot|jaunty|Python-urllib|lightDeckReports Bot|YYSpider|DigExt|YisouSpider|HttpClient|MJ12bot|heritrix|EasouSpider|Ezooms)" BADBOT
    Order Allow,Deny
    Allow from all
    Deny from env=BADBOT
</Directory>
```
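Before reloading Apache, you can preview which User-Agent strings this rule would flag. The Python sketch below approximates the rule; it is not Apache itself. It makes three assumptions:

- Python's `re` module handles this simple alternation the same way Apache's PCRE engine does.
- `re.IGNORECASE` stands in for the NoCase part of SetEnvIfNoCase.
- `is_bad_bot` is a hypothetical helper name.

```python
import re

# Same pattern as the SetEnvIfNoCase rule; "^$" matches an empty User-Agent.
BAD_BOT_PATTERN = re.compile(
    r".*(^$|FeedDemon|JikeSpider|Indy Library|Alexa Toolbar|AskTbFXTV|AhrefsBot|"
    r"CrawlDaddy|CoolpadWebkit|Java|Feedly|UniversalFeedParser|ApacheBench|"
    r"Microsoft URL Control|Swiftbot|ZmEu|oBot|jaunty|Python-urllib|"
    r"lightDeckReports Bot|YYSpider|DigExt|YisouSpider|HttpClient|MJ12bot|"
    r"heritrix|EasouSpider|Ezooms)",
    re.IGNORECASE,  # approximates the case-insensitive "NoCase" matching
)


def is_bad_bot(user_agent: str) -> bool:
    """Return True if the rule would set BADBOT for this User-Agent (hypothetical helper)."""
    # The pattern is not anchored, so search anywhere in the header value
    return BAD_BOT_PATTERN.search(user_agent) is not None


if __name__ == "__main__":
    for ua in ["ApacheBench/2.3", "", "Googlebot/2.1", "python-urllib/3.11"]:
        print(repr(ua), "->", "BADBOT" if is_bad_bot(ua) else "allowed")
```

Because the match ignores case and can occur anywhere in the header, short entries can catch more than intended. For example, `is_bad_bot("SomeRobot/1.0")` returns True, since `oBot` also matches the "obot" inside "Robot".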

Nginx

Create an agent_deny.conf file in the Nginx conf directory with the following contents. Each rule returns 403 Forbidden for matching requests.

```nginx
# Block crawlers such as Scrapy and curl (~* is a case-insensitive match)
if ($http_user_agent ~* (Scrapy|Curl|HttpClient)) {
    return 403;
}

# Block the listed user agents and empty user agents (~ is a case-sensitive match)
if ($http_user_agent ~ "FeedDemon|JikeSpider|Indy Library|Alexa Toolbar|AskTbFXTV|AhrefsBot|CrawlDaddy|CoolpadWebkit|Java|Feedly|UniversalFeedParser|ApacheBench|Microsoft URL Control|Swiftbot|ZmEu|oBot|jaunty|Python-urllib|lightDeckReports Bot|YYSpider|DigExt|YisouSpider|HttpClient|MJ12bot|heritrix|EasouSpider|Ezooms|^$") {
    return 403;
}

# Reject request methods other than GET, HEAD and POST
if ($request_method !~ ^(GET|HEAD|POST)$) {
    return 403;
}
```

Add the following line to the `location /` block of the site's server configuration, then save the file and reload the Nginx service.

```nginx
include agent_deny.conf;
```
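The three `if` blocks in agent_deny.conf are checked in order, and the first match returns 403. The Python sketch below models that logic so you can reason about it offline. It is an approximation, not nginx: it assumes Python's `re` behaves like nginx's PCRE for these patterns, and `nginx_decision` is a hypothetical function name. Note that the first rule uses `~*` (case-insensitive) while the second uses `~` (case-sensitive).

```python
import re
from typing import Optional

# Rule 1: "~*" is a case-insensitive regex match in nginx.
RULE_SCRAPERS = re.compile(r"(Scrapy|Curl|HttpClient)", re.IGNORECASE)

# Rule 2: "~" is case-sensitive; "^$" catches an empty User-Agent.
RULE_BAD_AGENTS = re.compile(
    r"FeedDemon|JikeSpider|Indy Library|Alexa Toolbar|AskTbFXTV|AhrefsBot|"
    r"CrawlDaddy|CoolpadWebkit|Java|Feedly|UniversalFeedParser|ApacheBench|"
    r"Microsoft URL Control|Swiftbot|ZmEu|oBot|jaunty|Python-urllib|"
    r"lightDeckReports Bot|YYSpider|DigExt|YisouSpider|HttpClient|MJ12bot|"
    r"heritrix|EasouSpider|Ezooms|^$"
)

# Rule 3: only these request methods are allowed through.
RULE_METHODS = re.compile(r"^(GET|HEAD|POST)$")


def nginx_decision(user_agent: str, method: str) -> Optional[int]:
    """Return 403 if agent_deny.conf would reject the request, else None."""
    if RULE_SCRAPERS.search(user_agent):
        return 403
    if RULE_BAD_AGENTS.search(user_agent):
        return 403
    if not RULE_METHODS.search(method):
        return 403
    return None  # the request continues to normal processing
```

For example, `nginx_decision("python-urllib/3.11", "GET")` returns None. Rule 2 is case-sensitive and only matches `Python-urllib`, so a lowercase variant slips through. Using `~*` in that `if` would close the gap.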

PHP

Add the following code at the top of the site's index file, before any output is sent.

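```php
<?php

// ...

/*
|--------------------------------------------------------------------------
| Anti Crawlers
|--------------------------------------------------------------------------
|
*/

$userAgent = isset($_SERVER['HTTP_USER_AGENT']) ? trim($_SERVER['HTTP_USER_AGENT']) : '';

$badAgents = array('FeedDemon', 'BOT/0.1 (BOT for JCE)', 'CrawlDaddy', 'Java', 'Feedly', 'UniversalFeedParser', 'ApacheBench', 'Swiftbot', 'ZmEu', 'Indy Library', 'oBot', 'jaunty', 'YandexBot', 'AhrefsBot', 'YisouSpider', 'jikeSpider', 'MJ12bot', 'WinHttp', 'EasouSpider', 'HttpClient', 'Microsoft URL Control', 'YYSpider', 'Python-urllib', 'lightDeckReports Bot');

// Treat a missing or empty User-Agent as a bad bot
$isBadBot = ($userAgent === '');

// Real User-Agent headers contain more than the bot name, so use a
// case-insensitive substring match instead of an exact in_array() comparison
foreach ($badAgents as $badAgent) {
    if (stripos($userAgent, $badAgent) !== false) {
        $isBadBot = true;
        break;
    }
}

if ($isBadBot) {
    http_response_code(403); // send 403 Forbidden instead of the default 200
    exit('Go away');
}
```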
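An exact comparison such as PHP's `in_array()` only fires when the whole header equals a list entry. Real User-Agent headers, however, usually wrap the bot name in extra text. The Python sketch below contrasts the two approaches under a few assumptions:

- The sample header is an illustrative AhrefsBot-style string.
- `BAD_AGENTS` is only a subset of the PHP list.
- `exact_match` and `substring_match` are hypothetical names.
- `str.casefold()` roughly stands in for PHP's case-insensitive `stripos()`.

```python
# Subset of the PHP $badAgents list, for illustration only
BAD_AGENTS = ["FeedDemon", "ApacheBench", "AhrefsBot", "MJ12bot", "Python-urllib"]


def exact_match(user_agent: str) -> bool:
    # Like PHP in_array(): the whole header must equal one entry
    return user_agent in BAD_AGENTS


def substring_match(user_agent: str) -> bool:
    # Like a stripos() loop: case-insensitive "contains" check against each entry
    ua = user_agent.casefold()
    return any(bad.casefold() in ua for bad in BAD_AGENTS)


sample = "Mozilla/5.0 (compatible; AhrefsBot/7.0; +http://ahrefs.com/robot/)"
print(exact_match(sample))      # False: the header is longer than "AhrefsBot"
print(substring_match(sample))  # True
```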

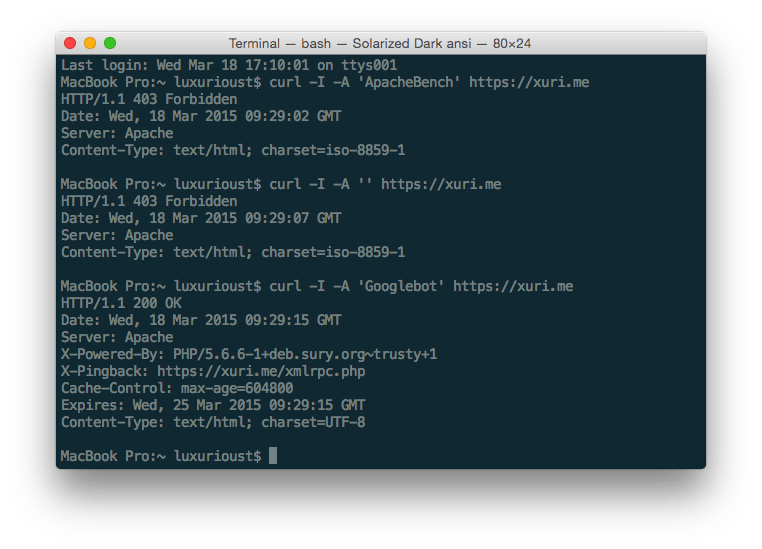

Test

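Use the curl command to simulate crawlers. The -A option sets the User-Agent header, and -I sends a HEAD request and prints only the response headers, so you can read the status code directly. Requests rejected by the Apache or Nginx rules come back as 403 Forbidden.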

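Simulate ApacheBench:

```shell
$ curl -I -A 'ApacheBench' https://xuri.me
```

Simulate a request without a User-Agent (given an empty argument, -A makes curl drop the header entirely):

```shell
$ curl -I -A '' https://xuri.me
```

Simulate Googlebot (this request should not be blocked):

```shell
$ curl -I -A 'Googlebot' https://xuri.me
```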
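To repeat these checks for several User-Agents at once, the Python sketch below sends one HEAD request per User-Agent (similar to `curl -I -A`) and collects the status codes. It uses only the standard library. `probe` and `probe_all` are hypothetical helper names, and the URL you pass in is up to you. Unlike `curl -A ''`, passing an empty string here sends an empty User-Agent header rather than omitting it.

```python
import urllib.error
import urllib.request
from typing import Dict, List


def probe(url: str, user_agent: str, timeout: float = 10.0) -> int:
    """Send a HEAD request with the given User-Agent and return the HTTP status code."""
    # Setting User-Agent explicitly also stops urllib from adding its default
    # "Python-urllib/x.y" agent, which is itself on the block lists
    request = urllib.request.Request(url, headers={"User-Agent": user_agent}, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # urllib raises HTTPError for 4xx/5xx responses such as 403 Forbidden
        return err.code


def probe_all(url: str, user_agents: List[str]) -> Dict[str, int]:
    """Map each User-Agent to the status code the server returned for it."""
    return {ua: probe(url, ua) for ua in user_agents}
```

For example, `probe_all("https://xuri.me", ["ApacheBench", "", "Googlebot"])` should map the first two entries to 403 once the Apache or Nginx rules are active.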

You can find a list of User-Agent strings at user-agents.org.